Email scams used to be laughably obvious. Broken English, desperate pleas from fictional royalty, promises of inherited fortunes. Not anymore. In 2025, scammers wield AI that can clone a voice from as little as three seconds of audio and generate videos most people cannot tell apart from real footage.

The Numbers Don’t Lie

| Metric | 2023 | 2025 |
|---|---|---|
| Deepfake files created | 500,000 | 8 million |
| Detection accuracy | 98% | 65% |
| Annual global losses | Growing | $50 billion+ |
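Taking the table's 2023 and 2025 file counts at face value, the growth rate is simple arithmetic. The per-year figure assumes steady growth (the same multiplier each year), which the numbers themselves do not confirm:

```latex
\frac{8{,}000{,}000}{500{,}000} = 16 \quad \text{(over two years)}, \qquad r^{2} = 16 \;\Rightarrow\; r = 4 \quad \text{(per year)}
```

That works out to a sixteenfold increase, or roughly a quadrupling every year.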

Deepfakes now cause one in every 20 identity verification failures. A finance worker recently transferred $25 million after a video call with their “CFO”, who was actually an AI impersonation. Retailers face over 1,000 AI-generated scam calls daily. This isn’t science fiction.

The Confidence Gap

Here’s the uncomfortable reality: 60% of people think they can spot deepfakes. Only 0.1% actually can when tested. Meanwhile, 70% admit they can’t distinguish real voices from clones. The people most confident in their detection abilities are often the easiest marks.

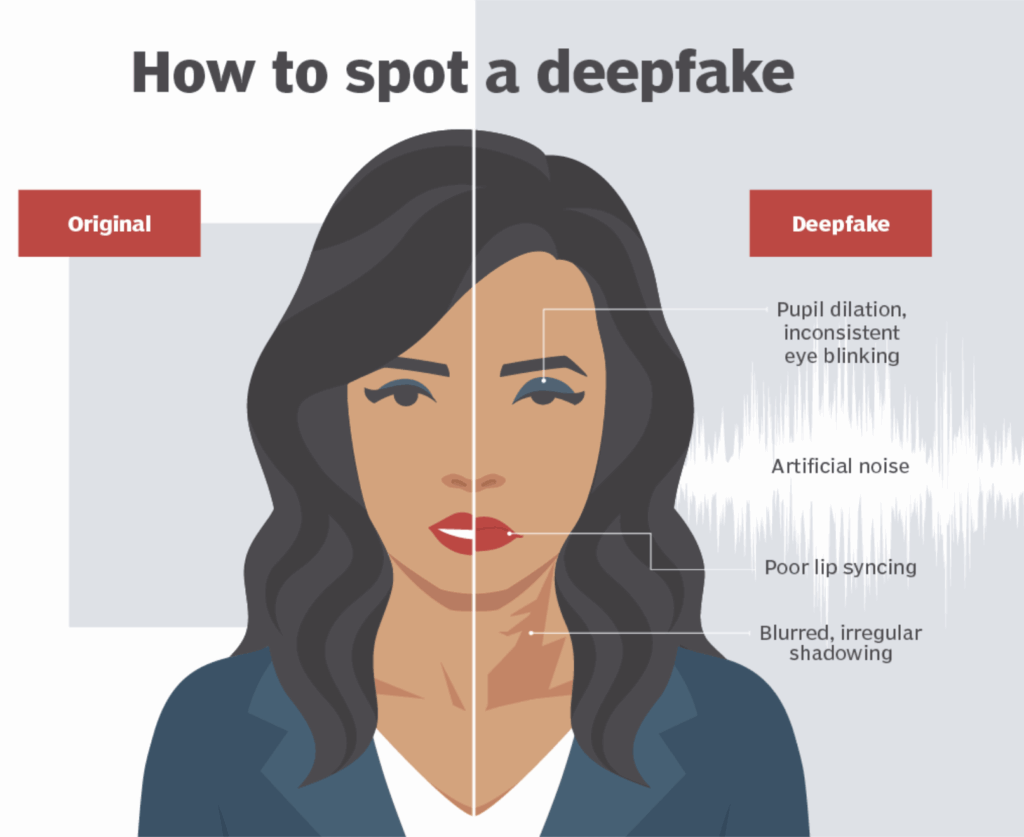

Spotting the Fakes

Video Warning Signs

Facial anomalies:

- Unnaturally smooth or oddly wrinkled skin

- Inconsistent lighting and shadows

- Abnormal or absent blinking patterns

- Lip movements mismatched to words

- Unnatural facial hair textures

Technical glitches:

- Asymmetrical features (different-sized eyes, mismatched earrings)

- Distorted hands or the wrong number of fingers

- Flickering around the edges of the face

- Facial expressions that contradict the tone of voice

Audio Red Flags

- Robotic cadence with awkward pauses

- Uneven sentence flow

- Strange background noise or echoing

- Vocal inflections that don’t match the context

- Missing natural speech habits, such as audible breaths, filler words, or self-corrections

Your Defense Protocol

1. Verification Rules

Never trust the contact method provided in suspicious messages.

| Suspicious Contact | Your Response |
|---|---|
| Urgent email from “boss” | Call their known number |
| Family emergency text | Use the saved contact to call back |
| Video call requesting money | Hang up, verify independently |
| Voice message needing action | Contact through official channels |
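The rule behind this table is that the callback channel always comes from your own records and never from the message itself. Here is a minimal Python sketch of that idea. It assumes a hypothetical personal address book (`KNOWN_CONTACTS`, with placeholder 555 numbers) and hypothetical `InboundRequest`, `callback_number`, and `next_step` helpers. It illustrates the habit and is not a real tool:

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical address book, filled in by you before any suspicious
# contact arrives. Names and 555 numbers are placeholders.
KNOWN_CONTACTS = {
    "boss": "+1-555-0100",
    "mom": "+1-555-0142",
}


@dataclass
class InboundRequest:
    claimed_sender: str    # who the message claims to be from
    supplied_contact: str  # number or address given in the message itself


def callback_number(request: InboundRequest) -> Optional[str]:
    """Look up the sender in your own records; supplied_contact is never used."""
    return KNOWN_CONTACTS.get(request.claimed_sender.strip().lower())


def next_step(request: InboundRequest) -> str:
    number = callback_number(request)
    if number is None:
        return "No saved contact: verify through official channels before acting"
    # Call the saved number even if the supplied one looks identical,
    # because caller ID and sender details can be spoofed.
    return f"Hang up and call {number}"


print(next_step(InboundRequest("Boss", "+1-555-9999")))  # prints "Hang up and call +1-555-0100"
```

The number inside the message is deliberately ignored, even when it matches. A scammer controls that field.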

Create a family code word: something memorable that can’t be guessed from anything on social media. Use it to confirm identity during unexpected calls.
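One way to get a phrase that is memorable but impossible to find on social media is to pick words from a list at random. Below is a sketch using Python's standard `secrets` module. The 16-word list and the helper names (`make_code_phrase`, `matches`, `possible_phrases`) are illustrative assumptions, not a standard tool:

```python
import secrets

# Illustrative 16-word list only. A real list (for example, a diceware
# word list with 7,776 entries) makes the phrase far harder to guess.
WORDS = [
    "anchor", "basil", "comet", "dune", "ember", "fjord", "gravel", "harbor",
    "igloo", "jasper", "kettle", "lantern", "meadow", "nutmeg", "orbit", "pepper",
]


def make_code_phrase(n_words: int = 3) -> str:
    """Pick words uniformly at random using a cryptographically secure RNG."""
    if n_words < 1:
        raise ValueError("need at least one word")
    return " ".join(secrets.choice(WORDS) for _ in range(n_words))


def matches(spoken: str, code_phrase: str) -> bool:
    """Compare what the caller said, ignoring case and extra spaces."""
    return " ".join(spoken.lower().split()) == " ".join(code_phrase.lower().split())


def possible_phrases(list_size: int, n_words: int) -> int:
    """Number of phrases an attacker would have to try in the worst case."""
    return list_size ** n_words


print(make_code_phrase())
```

With the toy list, `possible_phrases(16, 3)` is only 4,096 combinations. With a 7,776-word list, three words give 470,184,984,576. Choose the phrase once and share it in person.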

2. Challenge Requests

Ask specific questions only the real person would know:

- Reference recent conversations

- Mention inside jokes

- Ask about shared experiences

- Bring up personal details that aren’t posted online

Legitimate emergencies can wait 60 seconds for verification. Fake ones collapse under scrutiny.

3. Recognize Manipulation Tactics

Scammers manufacture urgency. They create artificial deadlines, invoke an emotional crisis, and pressure you to comply immediately. Real colleagues don’t demand instant wire transfers without documentation. Real family members will understand if you pause to verify, even in an emergency.

Immediate red flags:

- “Act now or lose everything”

- “Don’t tell anyone about this”

- “We need this in the next 10 minutes”

- “Verify by sending money first”
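These phrases can be turned into a crude screening check. The sketch below is a toy keyword heuristic, and its regular expressions are hypothetical paraphrases of the list above. Scammers can easily reword a message, so treat a match as a reason to slow down and verify. A message with no matches is not proof that it is safe:

```python
import re

# Toy patterns paraphrasing the red flags above; not an exhaustive filter.
RED_FLAGS = {
    "urgency": re.compile(r"\b(act now|right away|immediately|in the next \d+ minutes)\b", re.I),
    "secrecy": re.compile(r"\b(don[’']?t tell anyone|keep this (quiet|between us))\b", re.I),
    "payment_first": re.compile(r"\b(send(ing)? money|wire transfers?|gift cards?)\b", re.I),
    "threat": re.compile(r"\blose everything\b", re.I),
}


def red_flags(message: str) -> list[str]:
    """Return the name of every red-flag category the message triggers."""
    return [name for name, pattern in RED_FLAGS.items() if pattern.search(message)]


print(red_flags("Act now or lose everything!"))  # ['urgency', 'threat']
```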

4. Protect Your Digital Presence

Every video you post is potential training material for a deepfake. Every clear photo can become source material, and every voice recording can become cloning data.

Privacy checklist:

- Review social media settings monthly

- Limit public access to photos and videos

- Watermark personal content

- Set up Google Alerts for your name

- Consider what strangers can harvest from your profiles

5. Security Fundamentals

| Security Layer | Implementation |
|---|---|
| Multi-factor authentication | All important accounts |
| Unique passwords | Use a password manager |
| Software updates | Enable automatic updates |
| Video conference security | Require 2FA, use encryption |
| Platform consistency | Be suspicious of sudden platform changes |
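For the “Unique passwords” row, this is roughly what a password manager's generator does for you. Below is a minimal sketch using Python's `secrets` and `string` modules. The 20-character default and the 12-character minimum are illustrative assumptions, and the site names are hypothetical:

```python
import secrets
import string

# Letters, digits and punctuation: 94 possible characters per position.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Build a random password from ALPHABET using the OS's secure RNG."""
    if length < 12:  # assumed minimum for this sketch
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


# One distinct password per site, so a leak at one site exposes nothing else.
vault = {site: generate_password() for site in ("bank", "email", "payroll")}
```

A real password manager adds encrypted storage and autofill on top of this. The key idea is that every account gets its own random secret.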

6. Verify Content Before Sharing

That shocking video of a politician? That celebrity endorsement? Before hitting share, check multiple reliable sources. Look for poor grammar on the hosting page, verify dates and locations, and question anything that seems outlandish.

When You Encounter a Deepfake

Immediate actions:

- Report to the hosting platform

- Document everything (screenshots, URLs, timestamps)

- Contact law enforcement for fraud, blackmail, or defamation

- Notify your network to prevent the spread
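For the “Document everything” step, a simple structured log keeps screenshots, URLs, and timestamps together. This sketch uses a hypothetical `EvidenceEntry` record. Storing a SHA-256 fingerprint of each screenshot is an optional extra and an assumption on our part, not a legal requirement. The fingerprint lets you show later that the file has not changed:

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class EvidenceEntry:
    url: str
    observed_at_utc: str
    note: str
    screenshot_sha256: str  # fingerprint of the saved screenshot's bytes


def log_entry(url: str, note: str, screenshot: bytes) -> EvidenceEntry:
    """Record where and when you saw the content, plus a hash of the screenshot."""
    return EvidenceEntry(
        url=url,
        observed_at_utc=datetime.now(timezone.utc).isoformat(timespec="seconds"),
        note=note,
        screenshot_sha256=hashlib.sha256(screenshot).hexdigest(),
    )


def to_json_line(entry: EvidenceEntry) -> str:
    """Serialize one entry as a single JSON line for an append-only log file."""
    return json.dumps(asdict(entry), sort_keys=True)
```

In practice you would pass the screenshot's bytes (for example `Path("screenshot.png").read_bytes()`, with a hypothetical filename) and append each `to_json_line` result to a file.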

Preventive measures:

- Watermark personal photos

- Monitor your digital footprint

- Alert contacts about potential impersonations

The Reality Check

Deepfake technology advances faster than detection methods. No magic solution exists. But scammers still rely on basic social engineering: manufactured urgency, emotional manipulation, and exploiting human trust.

Your best defense? Pause. Breathe. Verify through independent channels. Ask questions. Trust your instincts when something feels wrong.

The finance worker who transferred $25 million had one chance to stop and verify. They didn’t take it. You will.

In 2025, skepticism isn’t paranoia – it’s survival. Take 60 seconds to confirm identity before sending money, sharing information, or taking urgent action. Your future self will thank you for that moment of hesitation.